Flaws of Chatbots as a User Interface for AI

Chatbots have emerged as one of the most common interfaces for AI. They’re everywhere, from customer service platforms to virtual assistants. At first glance, they seem like the perfect tool, bridging the gap between complex AI systems and everyday users. But if we dig a little deeper, some significant flaws emerge that make chatbots a less-than-ideal interface for large language models (LLMs). Here’s a closer look at three critical issues that suggest the chatbot UI might be holding us back.

1. The Illusion of Human-Like Conversation

Chatbots are designed to simulate human conversation. That might sound like a good thing, but it has a big downside: when something looks and feels like a human conversation, we’re more inclined to trust it. We spend all day texting and chatting with people, so when a chatbot mimics that style, we start to believe what it says a bit too easily. This can lead users to overestimate the accuracy of the chatbot’s responses.

Imagine you’re texting a friend. You trust that what they say is grounded in some personal knowledge or experience. When a chatbot mimics this style, you might subconsciously extend it the same level of trust. But unlike your friend, a chatbot has no personal experiences or firsthand knowledge to draw on. It generates responses from statistical patterns learned from its training data, and those responses are not always accurate. A different interface, one that didn’t look so much like a human conversation, might encourage us to approach the information with a more critical eye and help mitigate the risk of over-trusting the AI’s outputs.

2. Stagnant UI in a Rapidly Evolving Field

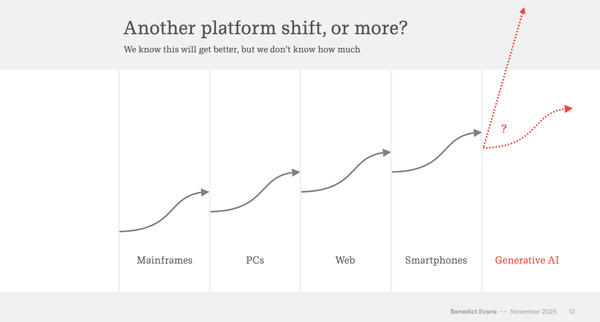

LLMs are getting smarter. They’re evolving quickly, gaining new capabilities, and becoming more sophisticated. But here’s the catch: the chatbot interface isn’t evolving at the same pace. This creates a disconnect. While the underlying technology becomes more powerful, the way we interact with it stays the same.

For example, companies like Anthropic have started exploring new ways to present information, such as a dedicated panel (Anthropic calls it Artifacts) where the chatbot can display a chart alongside the conversation. This is a step in the right direction, but overall, the chatbot interface remains static. It’s still essentially a text box where you type questions and get answers.

This static interface can be misleading. If you’ve been using ChatGPT for a while, you know there’s a real difference between the models behind it, say GPT-3.5 and GPT-4. But a casual user might not notice this evolution, because the interface doesn’t show it: there’s no visual cue or significant change in the UI to signal that the AI behind the chatbot has improved. This stands in stark contrast to other industries. Take the iPhone: each new model typically comes with a design tweak that visually sets it apart from the previous one, signaling to users that it’s new and improved. Cars work the same way, with manufacturers introducing new designs to show that a model has changed. With chatbots, we see no comparable evolution in the interface, even though the underlying models keep advancing.

This is a missed opportunity. The UI should reflect the AI’s growth, making it easier for users to understand that they’re dealing with a more advanced system. Without this, people might not realize the full potential of the AI they’re interacting with, leading to underutilization of its capabilities.

3. Advanced Knowledge Required for Effective Use

Another significant flaw with chatbots is that they require a certain level of expertise to use effectively. At first glance, it might seem like anyone can type a question into a chatbot and get a good answer. But the reality is more complicated. To really get the best out of an LLM through a chatbot interface, you need to understand how these models work.

Consider an example: two people are sitting side by side, both using the same chatbot. One has spent hours reading about LLMs and learning how to craft prompts that get the best results. The other has no such background and simply types in questions as they come to mind. The first person is likely to get much better responses because they know how to phrase their questions and what to ask. The second might end up with less accurate or less useful information, not because the AI is flawed, but because they don’t know how to interact with it effectively.

This creates a gap. The AI’s potential is there, but only a few people can fully access it. The rest might get frustrated or simply accept subpar answers, not realizing that better responses are possible with the right knowledge. This issue is fundamentally inequitable. If the AI is truly “deployable intelligence,” then we should be working on making it accessible to everyone, regardless of their level of expertise. The UI should help bridge this gap, making it easier for all users to get the most out of the AI without needing to dive deep into technical details.

The Need for New Business Models and UI Designs

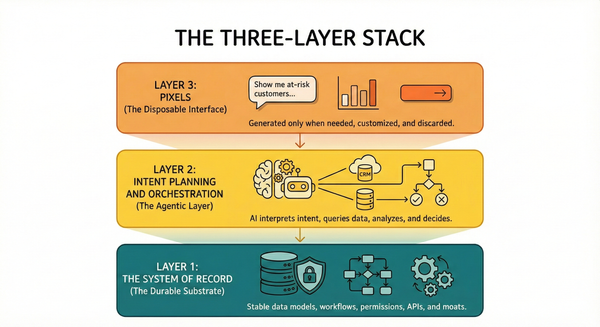

These flaws point to a broader issue: the need for new business models and user interface designs in the AI space. We’re at a point where the traditional chatbot UI might not be the best way to interact with increasingly complex and powerful AI systems. As LLMs continue to evolve, their interfaces need to evolve as well, reflecting the changes in capability and making it easier for users to engage with them effectively.

Consider this: the way we interact with AI could be vastly different. Instead of a simple text box, imagine an interface that adapts to the user’s needs, offering visual cues, interactive elements, and other features that make it clear what the AI can do and how to best utilize it. Such an interface could guide users, helping them understand the AI’s strengths and limitations, and ensuring that they’re getting the best possible results without needing to be experts in AI.

The business models surrounding AI could also change. Right now, many AI tools are marketed as one-size-fits-all solutions, but that’s not necessarily the best approach. We might need more tailored solutions, designed for different levels of expertise and different use cases. This could involve tiered services, where more advanced users get access to more complex tools, while casual users are provided with a more guided, user-friendly experience.

Conclusion: Rethinking the Future of AI Interfaces

In summary, while chatbots have been a useful interface for interacting with AI, they come with significant flaws that we can’t ignore. The illusion of human-like conversation can lead to misplaced trust. The static nature of the UI fails to reflect the rapid advancements in LLMs. And the need for advanced knowledge to effectively use these tools creates a gap that leaves many users behind.

As we move forward, it’s clear that we need to rethink how we design AI interfaces. We should focus on creating UIs that evolve alongside the technology, making it easier for all users to engage with AI in meaningful ways. This might involve exploring new types of interfaces, developing business models that cater to different user needs, and ultimately, ensuring that the incredible potential of AI is accessible to everyone. The future of AI is bright, but to fully realize it, we need to break away from the limitations of the chatbot UI and explore new possibilities.