Building Smarter Apps: The Power of Large Language Models (LLMs)

When you buy a car, you think about what’s under the hood. Some care about horsepower, while others focus on fuel efficiency. Similarly, when building applications, especially AI-driven ones, what’s under the hood matters. Instead of an engine, you’ve got large language models (LLMs). These are the brains behind modern AI apps, and they keep improving over time, much like keeping your car but getting engine upgrades and modifications along the way.

The fascinating part? These LLMs have been evolving rapidly, making a real difference in what applications can do. Tools that seemed almost sci-fi a couple of years ago are now within reach. Cursor, an AI-assisted code-writing tool, is a prime example of how these advancements are changing the game. But what’s going on behind the scenes that makes these tools so much better now? Let’s dive into some recent highlights in the world of LLMs and their impact on development.

OpenAI’s File Search: The End of Hallucination?

Let’s start with one of the most significant updates: OpenAI’s recent improvements in file search. About a year ago, Air Canada made headlines when its chatbot hallucinated a bereavement fare policy that didn’t exist. A customer relied on it, the dispute ended up before a tribunal, and the airline was held responsible for what its chatbot had said. Hallucinations like this, where AI generates false information, were a common problem, especially with earlier models. OpenAI’s file search update is aimed squarely at that problem.

So, what’s different now? File search lets developers import external documents, which are split into chunks and converted into embeddings. An embedding is a list of numbers (a vector) that captures the meaning of a piece of text, so passages about similar things end up close together. This means you can retrieve the right passages from these documents using vector or keyword searches, which adds precision and context to AI-generated responses.
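To make the retrieval idea concrete, here is a minimal sketch. It is not how OpenAI’s file search works internally: the "embedding" below is just a word-count vector, whereas real systems use learned dense vectors, and the function names and policy snippets are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (an assumption for this sketch)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]


# Hypothetical policy snippets standing in for chunks of an uploaded document.
CHUNKS = [
    "Refund requests must be submitted within 30 days of purchase.",
    "Checked baggage is limited to two bags per passenger.",
    "Support is available by phone on weekdays.",
]

print(search("How many days do I have to request a refund?", CHUNKS))
```

The query shares the most words with the refund snippet, so that is the chunk that comes back. A learned embedding would also match passages that use different wording for the same idea, which word counts cannot do.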

Let’s say you have a company policy or user guide. You can upload it, and OpenAI handles the indexing and retrieval for you. When a query comes in, the AI pulls from these pre-uploaded files rather than guessing or “hallucinating” a response. This makes it much less likely that the AI will make up an answer, although it can’t rule it out entirely.
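If you were wiring this up by hand, the "pull from the files instead of guessing" step might look something like the sketch below. The function name and the prompt wording are assumptions for illustration, not OpenAI’s actual implementation; the point is only that retrieved passages go into the prompt and the model is told to stay within them.

```python
def build_grounded_prompt(question: str, passages: list[str]) -> str:
    """Assemble a prompt that asks the model to answer only from the passages."""
    if not passages:
        # With nothing retrieved, asking the model anyway invites a guess.
        raise ValueError("no passages retrieved; escalate instead of guessing")
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the passages below. "
        "If they do not contain the answer, say you do not know.\n\n"
        f"Passages:\n{numbered}\n\n"
        f"Question: {question}"
    )


# Hypothetical passage, as if returned by a search over an uploaded policy file.
prompt = build_grounded_prompt(
    "Can I get a refund after six weeks?",
    ["Refund requests must be submitted within 30 days of purchase."],
)
print(prompt)
```

Refusing to build a prompt when nothing was retrieved is a deliberate choice here: an empty context is exactly the situation where a model is most tempted to invent a policy.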

Now, some developers have already been doing this with tools like LlamaIndex, but what makes OpenAI’s solution special is its ease of use. There’s no need for a large toolchain or complex setup. You can do it all within OpenAI’s ecosystem, which aligns with their goal of simplifying AI integration for developers.

Claude’s Token Count: Bigger, Better, Faster

Switching gears, let’s talk about Claude. This AI, developed by Anthropic, is another player in the LLM space. Recently, Anthropic doubled the output token limit for Claude 3.5 Sonnet in API calls, to 8,192 tokens from the previous limit of 4,096. If you’re wondering, “Why does that matter?” here’s the deal: tokens are essentially chunks of text, often a word or a piece of a word. The more tokens a model can produce in one response, the larger the task it can finish in a single call.

This update is especially important if you’re writing code, working with large documents, or trying to handle more complex queries. With double the output space, Claude can now produce longer responses in one go without being cut off partway through.
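Here is a rough way to see what the doubling buys you. The sketch assumes the common rule of thumb of about 4 characters per token for English text, which is only an approximation (real tokenizers vary), and it uses 4,096 and 8,192 as illustrative limits. The function names and the 20,000-character file are hypothetical.

```python
def rough_token_count(text: str) -> int:
    """Estimate tokens using a ~4 characters per token rule of thumb (rounded up)."""
    return (len(text) + 3) // 4


def fits_output_limit(text: str, max_output_tokens: int) -> bool:
    """True if a response of this size would fit in one call without truncation."""
    return rough_token_count(text) <= max_output_tokens


# Hypothetical generated source file of about 20,000 characters.
generated_file = "x" * 20_000

for limit in (4_096, 8_192):
    verdict = "fits" if fits_output_limit(generated_file, limit) else "gets cut off"
    print(f"limit {limit}: ~{rough_token_count(generated_file)} tokens, {verdict}")
```

Under these assumptions the file is about 5,000 tokens: too long for the old limit, comfortably within the new one. Before the change, a tool would have had to split that output across multiple calls and stitch the pieces together.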

Even though models like Claude rarely use the full limit in a single response, having the extra room is useful. For instance, if you’re working on a project with extensive code or trying to write a longer article, that extra capacity comes in handy. This change has been particularly useful for developers working with Cursor, as it allows them to generate more detailed, complete outputs with less effort.

The Cursor Connection: When LLMs Get Good Enough

Cursor, a tool designed to help developers write code with minimal effort, has been benefiting from these improvements. While Cursor has been around for a while, its popularity has spiked recently, and part of that rise coincides with improvements in the underlying LLMs, like Claude and OpenAI’s models.

Here’s the thing: Cursor depends on the capabilities of LLMs. As LLMs get better at handling more tokens, retrieving information, and generating coherent, complex code, Cursor becomes more useful. Some developers argue that Cursor, along with an LLM like Claude, still can’t handle the demands of building more complex apps. However, it’s becoming increasingly possible to create simple front-end applications with minimal effort using these tools.

Even if it’s not perfect yet, the gap is closing fast. We’re seeing AI-assisted development evolve to the point where it’s becoming practical for more developers to sit down, sketch out an idea, and have the AI do a lot of the heavy lifting. What’s interesting is that this shift is happening because the LLMs underneath Cursor have improved to the point where they’re reliable for specific tasks.

There’s also a bit of a marketing boost when a founder or influencer highlights your product. Cursor saw a bump in interest after a few tweets, but it wouldn’t have sustained that interest without the capability to deliver. That capability, again, comes back to LLM improvements.

The Future of Building with LLMs: Looking Ahead

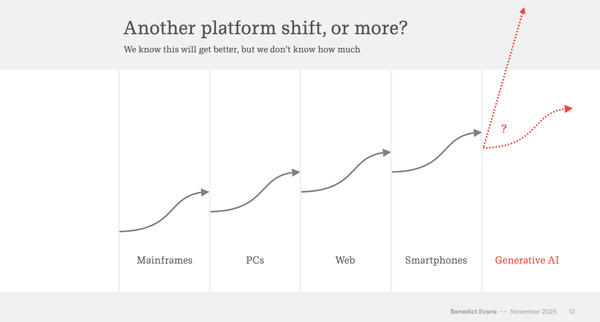

So, what does this all mean for developers and businesses? The real takeaway here is that LLMs are on a continuous improvement trajectory. What you can build today might be limited by the current capabilities of these models, but six months or a year from now, those same limitations could disappear. As the technology evolves, applications will get better, more efficient, and easier to build.

For instance, think about what you’re working on right now. Is it a simple chatbot, a document search tool, or maybe something more complex like an automated customer service app? Whatever it is, keep in mind that these tools are improving. What might seem challenging or even impossible today could become routine as LLMs get better at processing language, retrieving information, and making decisions.

The question isn’t just what you can build now, but what will be possible in the near future as the underlying technology catches up. As more companies like OpenAI and Anthropic continue to roll out updates, the landscape of what’s possible with AI will keep expanding.

Conclusion: Maximizing the Power of LLMs in Your Apps

As AI technology evolves, developers and businesses need to stay on top of these advancements. Whether it’s the introduction of file search capabilities or a doubling of token limits, each improvement in LLM technology opens up new possibilities for what applications can do.

OpenAI’s file search update reduces hallucinations, making responses more accurate and reliable. Claude’s increased token limit allows for more complex code generation and content creation. And tools like Cursor are benefiting from these advancements, enabling developers to build apps with minimal effort.

Looking ahead, the future of AI development is exciting. As LLMs continue to improve, the gap between what we want to build and what we can actually build will keep shrinking. So, the next time you’re developing an AI-driven app, remember that the underlying technology is evolving. What’s possible now is just the beginning.